Artificial intelligence (AI) has become part of everyday life. From virtual assistants to self-driving cars, it is changing how we live, work, and interact with technology. As its use grows, however, so do concerns about whether these systems are transparent and whether they can be trusted.

To address this concern, the Coalition for Health AI has released its Applied Model Card on GitHub. Experts have welcomed the move as a significant step toward building trust in AI.

The Coalition for Health AI is a consortium of leaders from healthcare, academia, and industry who have joined forces to promote the safe, responsible, and trustworthy use of AI in healthcare. Its mission is to ensure that AI is developed and deployed in ways that benefit both patients and healthcare professionals.

One of the key obstacles to adopting AI in healthcare has been a lack of transparency. AI algorithms are complex and often difficult to interpret, so healthcare professionals struggle to understand how a model arrives at its outputs. This opacity has been a major barrier to trust, and the Coalition for Health AI is determined to address it.

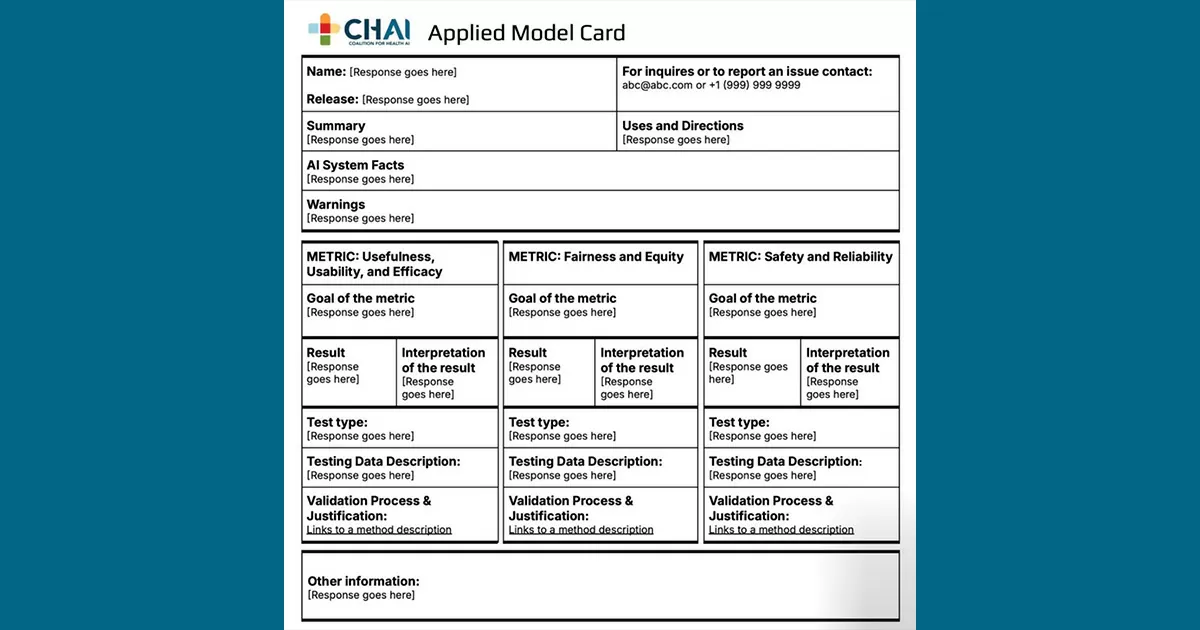

The Applied Model Card, a transparency tool developed by the Coalition for Health AI, gives developers a standardized way to document their AI models, including data sources, algorithm type, performance metrics, and potential biases. Developers can share this documentation with users and regulators, helping them understand how a model works and make informed decisions about it.
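To make the idea of a model card more concrete, here is a minimal Python sketch of the kind of record such a card might hold. It is not the Applied Model Card's actual template or schema: the `ModelCard` class, its field names, and the example values are hypothetical, chosen only to mirror the categories named above (data source, algorithm type, performance metrics, potential biases).

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    """Hypothetical, simplified model card; field names are illustrative, not CHAI's schema."""
    model_name: str
    data_sources: list[str]
    algorithm_type: str
    # Metric name -> value; the values used below are placeholders, not real results
    performance_metrics: dict[str, float] = field(default_factory=dict)
    # Free-text notes on known or suspected biases in the model or its training data
    known_biases: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        # Serialize the card to JSON so it can be shared with users and regulators
        return json.dumps(asdict(self), indent=2)


# Example card for a hypothetical model; every value is illustrative
card = ModelCard(
    model_name="example-sepsis-risk-model",
    data_sources=["Hypothetical EHR extract, 2018-2022"],
    algorithm_type="Gradient-boosted trees",
    performance_metrics={"AUROC": 0.80},
    known_biases=["Under-represents patients over 85"],
)
print(card.to_json())
```

Serializing to JSON is just one simple way to make such a record easy to share; the template actually published on GitHub may use a different structure and format.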

According to Dr. Brian Anderson, CEO of the Coalition for Health AI, the tool will help build “the kind of trust that we need” in AI. He believes that transparency is essential to the responsible and ethical use of AI and that the Applied Model Card will play a central role in achieving it.

Releasing the Applied Model Card on GitHub is significant because it lets developers access and use the tool for free. GitHub is a widely used platform for collaborative software development, so publishing the tool there also allows developers around the world to contribute to its development and improvement.

Beyond promoting transparency, the Applied Model Card also aims to address bias in AI algorithms. Bias is a major concern because it can perpetuate, and even amplify, existing inequalities in healthcare. By prompting developers to document potential biases in their models, the Applied Model Card can help them identify and mitigate those biases, supporting fairer decision-making.

The release has drawn positive responses from experts in the field. Dr. Michael Wang, Director of Artificial Intelligence at Taiwan’s Chang Gung Memorial Hospital, believes the tool will facilitate “better collaboration and communication between developers, users, and regulators.” Other experts have echoed this view, describing the release as a significant step toward building trust in AI.

The Applied Model Card benefits not only the healthcare industry but also AI developers in other fields. In principle, the same documentation approach can be applied to other kinds of AI models, so the tool has the potential to promote transparency and trust in other sectors as well.

In conclusion, the Coalition for Health AI’s decision to release its Applied Model Card on GitHub is a major step toward building trust in AI. By promoting transparency and addressing potential bias, the tool can help ensure the responsible and ethical use of AI in healthcare and help society realize the technology’s full benefits. With support from experts and developers around the world, the Applied Model Card could pave the way for a more transparent and trustworthy future for AI.